Brief by Shorts91 Newsdesk / 05:37am on 03 Mar 2026, Tuesday Tech Today

OpenAI CEO Sam Altman acknowledged that OpenAI rushed into a Pentagon deal following the termination of Anthropic's contract, admitting the approach was "opportunistic and sloppy." He said the move was intended to prevent further escalation between the US Defense Department and the AI industry. OpenAI has since updated its Pentagon contract to clarify its "principles," and Altman said future decisions will be made more cautiously. His remarks followed a surge in ChatGPT uninstalls, while Claude downloads rose as users shifted to competitors. Altman emphasized safeguarding civil liberties, stating that the agreement prohibits mass domestic surveillance and does not permit use by the NSA without a contract modification. (PC: X)

Brief by Shorts91 Newsdesk / 01:04pm on 21 Feb 2026, Saturday Tech Today

The US, Russia and China were among more than 80 nations that signed the New Delhi Declaration at the AI Impact Summit 2026; in total, 88 countries and international groups endorsed the pact. The declaration stresses national sovereignty, wider access to AI and stronger global cooperation, and says the benefits of artificial intelligence must be shared fairly: "The benefits of AI must be equitably shared across humanity," the statement said. The summit, held in New Delhi from February 18 to 20, drew over five lakh visitors. Union IT Minister Ashwini Vaishnaw called it a "grand success" and said India secured over USD 250 billion in investment commitments. (PC: X)

Brief by Shorts91 Newsdesk / 01:31pm on 20 Feb 2026, Friday Tech Today

India's first AI-powered air taxi, an electric Vertical Take-Off and Landing (e-VTOL) aircraft, was showcased at the India AI Impact Summit 2026 at Bharat Mandapam. Developed by The E Plane Company in collaboration with IIT Madras, the aircraft requires no runway, runs entirely on battery power, and can cover 36 km in just eight minutes at an estimated fare of Rs 1,700. It can make multiple stops on a single charge and operates below 120 decibels. The airframe and body are fully manufactured in India, and the company has already secured DGCA Design Organisation Approval. Air ambulance services are planned for Bengaluru, Chennai, Pune, Mumbai and Ahmedabad by September–October 2027, ahead of commercial air taxi operations. (PC: NDTV)

Brief by Shorts91 Newsdesk / 02:10pm on 19 Feb 2026, Thursday Tech Today

An awkward moment occurred during a group photo at the India AI Impact Summit in New Delhi, when OpenAI CEO Sam Altman and Anthropic CEO Dario Amodei, who lead rival AI firms, opted for a raised fist bump instead of holding hands. The two appeared unsure for a moment before settling on the gesture, and the clip drew reactions online. One tech founder wrote, "When AGI? The day Dario and Sam hold hands." Prime Minister Narendra Modi stood at the centre of the lineup, alongside other global tech leaders. At the event, Amodei said AI may soon exceed "most humans in most domains," while Altman said India is leading in AI adoption. (PC: NDTV)

Brief by Shorts91 Newsdesk / 06:15am on 19 Feb 2026, Thursday Tech Today

India’s homegrown Sarvam AI is a next-generation artificial intelligence model built to understand and process Indian languages and contexts. Developed by a Bengaluru-based startup, the model supports 22 official Indian languages and performs tasks such as image captioning, speech recognition, scene text reading, and document understanding. At the India AI Impact Summit 2026, Google CEO Sundar Pichai praised the initiative, highlighting India’s growing AI capabilities. Reports suggest Sarvam AI has outperformed leading global systems in select Indian language benchmarks, marking a major step toward sovereign AI development and improved digital accessibility across India.

Brief by Shorts91 Newsdesk / 12:41pm on 18 Feb 2026, Wednesday Tech Today

Google and Alphabet CEO Sundar Pichai said India is a global model for making AI accessible to more people. Speaking at a press conference in New Delhi ahead of the India AI Impact Summit, he said India's digital systems and language diversity create a strong base for AI growth and that the country is set for an "extraordinary trajectory" in AI. Google also announced India-focused initiatives, including subsea cable links, AI tools for 10,000 schools and support for 2 crore public servants, along with a new AI certificate course to be offered in English and Hindi. "AI must work across languages and local contexts," he said.

Brief by Shorts91 Newsdesk / 12:32pm on 18 Feb 2026, Wednesday Tech Today

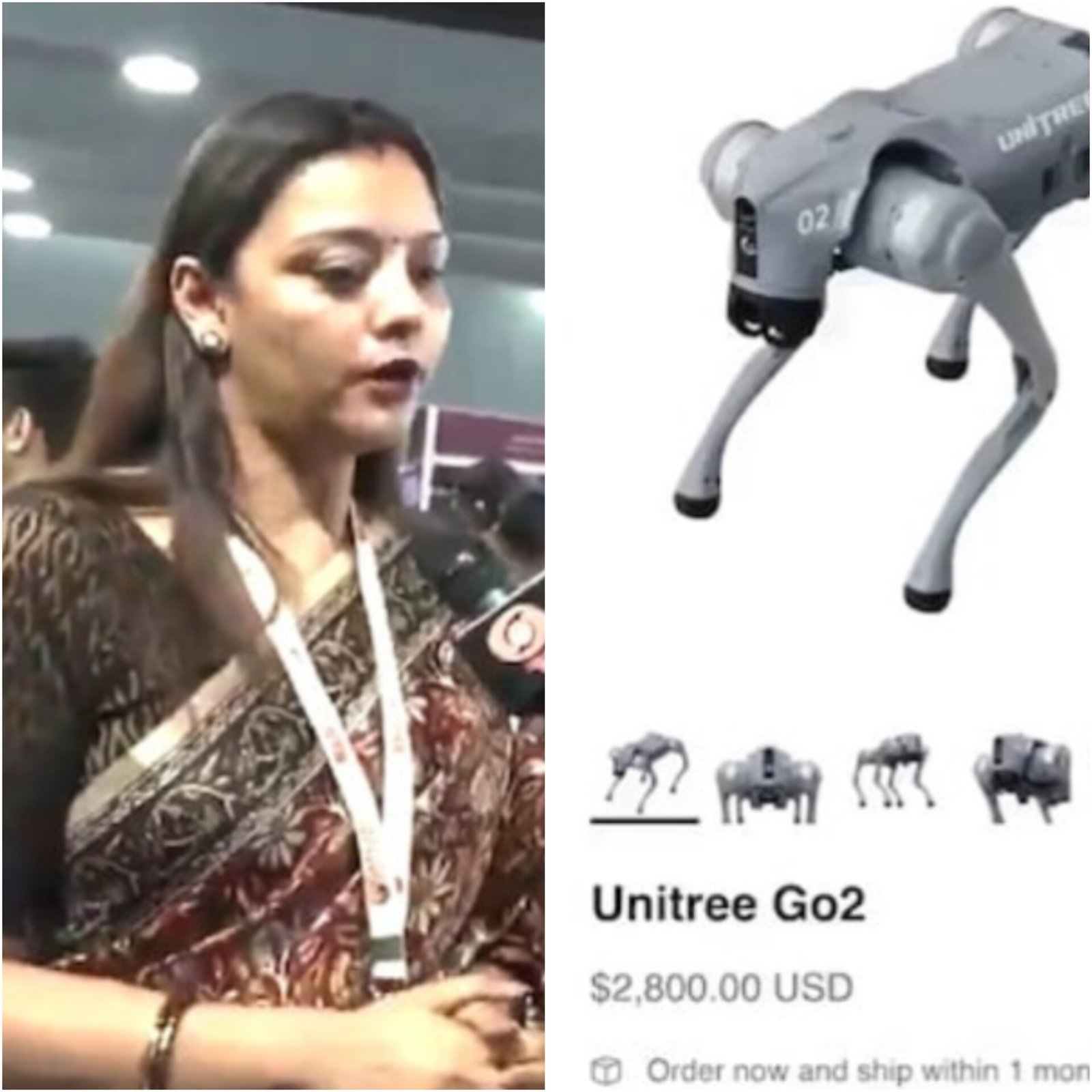

Galgotias University was asked to vacate its stall at the India AI Impact Summit after a dispute over a robotic dog display. A viral video showed staff saying the robot had been built by the university's Centre of Excellence, but social media users identified the machine as a Chinese-made Unitree Go2 that is sold online. The robot was presented at the expo under the name "Orion." The university later said it had only purchased the robot for training and never claimed to have built it. "It is a classroom in motion," the university said in a statement on X. An X community note said the earlier on-camera claims indicated otherwise.

Brief by Shorts91 Newsdesk / 10:56am on 18 Feb 2026, Wednesday Tech Today

Galgotias University vacated its stall at the India AI Impact Summit in New Delhi after a controversy over a robotic dog displayed at its booth, sources said. The device, shown as part of a campus AI project, was identified online as a Chinese-made Unitree Go2 robot. A professor had described it as having been developed under the university's AI initiative. The university later said it never claimed to have built the robodog and called it a teaching tool, but an X community note disputed this clarification. Professor Neha Singh said, "We cannot claim that we manufactured it." Registrar Nitin Kumar Gaur called the issue a communication mix-up. (PC: India Today)

Brief by Shorts91 Newsdesk / 08:22am on 18 Feb 2026, Wednesday Tech Today

Google CEO Sundar Pichai met Prime Minister Narendra Modi on Wednesday on the sidelines of the India AI Impact Summit 2026 at Bharat Mandapam, New Delhi. The two discussed Google's ongoing and future collaborations in AI research, innovation and applied sciences. PM Modi announced that India will "more than double" its GPU capacity within six months. The five-day summit, running February 16–20, brings together global policymakers, tech CEOs and researchers. Key highlights include the launch of India's homegrown AI wearable glasses, Sarvam Kaze; TCS committing $2 billion to AI research; and a $540 million Bridgewater Associates investment in AI startup Edgelingo. Modi is scheduled to meet over a dozen world leaders on the summit's sidelines.

Brief by Shorts91 Newsdesk / 05:37am on 18 Feb 2026, Wednesday Tech Today

Google and Alphabet CEO Sundar Pichai has reached India to attend the AI Impact Summit in New Delhi, where he will give the keynote address on February 20. After landing, Pichai shared a message on X saying, “Nice to be back in India for the AI Impact Summit.” The five-day event began on February 16 at Bharat Mandapam and is organised under the IndiaAI Mission and the Ministry of Electronics and IT. The summit focuses on responsible and inclusive use of artificial intelligence. Several global tech leaders and policymakers are taking part. Pichai said Google wants to be a long-term AI partner for India. (PC: HT)